Using TensorFlow TensorBoard

Note: This post is a personal reflection, not a tutorial.

Real-time visualisation is important in machine learning research, and TensorBoard provides it. This post documents my experiments with TensorBoard.

TensorBoard Guides

I looked at these guides:

- TensorBoard Getting Started Guide

- TensorBoard Scalars: Logging training metrics in Keras

- Displaying image data in TensorBoard

- Examining the TensorBoard Graph

These TensorFlow API references are also relevant:

Logging Audio

TensorFlow API reference for logging audio.

`speech_4` was a list of 1D NumPy arrays representing mono audio. `transcripts` was a list of strings representing the spoken characters in the corresponding audio.
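The actual speech data is not included in this post. As a minimal sketch for trying the logging code without it, the block below builds hypothetical stand-ins with the same structure: four 1D float32 sine tones in place of the recordings, and placeholder strings in place of the real transcripts. The helper `make_tone`, the constant `SAMPLE_RATE`, the tone frequencies, and the placeholder text are all assumptions made for illustration.

```python
import numpy as np

SAMPLE_RATE = 48000  # same rate that is passed to tf.summary.audio in this post


def make_tone(freq_hz, seconds=1.0, sample_rate=SAMPLE_RATE):
    """Return a 1D float32 sine wave with values in [-0.5, 0.5] (hypothetical helper)."""
    t = np.arange(int(seconds * sample_rate)) / sample_rate
    return (0.5 * np.sin(2 * np.pi * freq_hz * t)).astype(np.float32)


# Hypothetical stand-ins: four mono clips and one placeholder transcript per clip.
speech_4 = [make_tone(f) for f in (220.0, 330.0, 440.0, 550.0)]
transcripts = ["placeholder transcript {}".format(i + 1) for i in range(4)]
```

Each element of `speech_4` is one second of mono audio, so it can be passed through the same reshape and logging steps as real recordings.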

    from datetime import datetime

    import tensorflow as tf

    # Clear out any prior log data (notebook shell command).
    !rm -rf logs

    # Set up a timestamped log directory.
    logdir = "logs/train_data/" + datetime.now().strftime("%Y%m%d-%H%M%S")

    # Create a file writer for the log directory.
    file_writer = tf.summary.create_file_writer(logdir)

    for i in range(4):
        print(i)
        audio = speech_4[i]
        # Reshape to (clips, frames, channels): one mono clip per summary.
        audio = audio.reshape((1, -1, 1))
        description = '**Transcript**: {}\n\n'.format(transcripts[i])
        with file_writer.as_default():
            tf.summary.audio("Audio " + str(i+1), audio, 48000, step=0, description=description)
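The reshape above works when each clip is already floating-point audio. As far as I can tell from the API reference, `tf.summary.audio` expects floating-point values in the range [-1.0, 1.0], so integer PCM data or out-of-range samples would need converting first. The following is a sketch of one way to do that with NumPy only. The helper name `to_audio_batch` is hypothetical, and treating integer input as PCM scaled by the dtype's range is an assumption, not something taken from my original experiment.

```python
import numpy as np


def to_audio_batch(waveform):
    """Convert a 1D mono waveform to a float32 array of shape (1, frames, 1)."""
    samples = np.asarray(waveform)
    if np.issubdtype(samples.dtype, np.integer):
        # Assumption: integer input is PCM, so scale by the dtype's magnitude
        # (32768 for int16) to land in [-1.0, 1.0).
        scale = float(np.iinfo(samples.dtype).max) + 1.0
        samples = samples.astype(np.float32) / scale
    else:
        samples = samples.astype(np.float32)
    # Peak-normalise only if some sample falls outside [-1.0, 1.0].
    peak = float(np.max(np.abs(samples))) if samples.size else 0.0
    if peak > 1.0:
        samples = samples / peak
    # One clip, all frames, one channel.
    return samples.reshape((1, -1, 1))
```

With this helper, `audio = to_audio_batch(speech_4[i])` could stand in for the two lines that fetch and reshape the clip in the loop.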

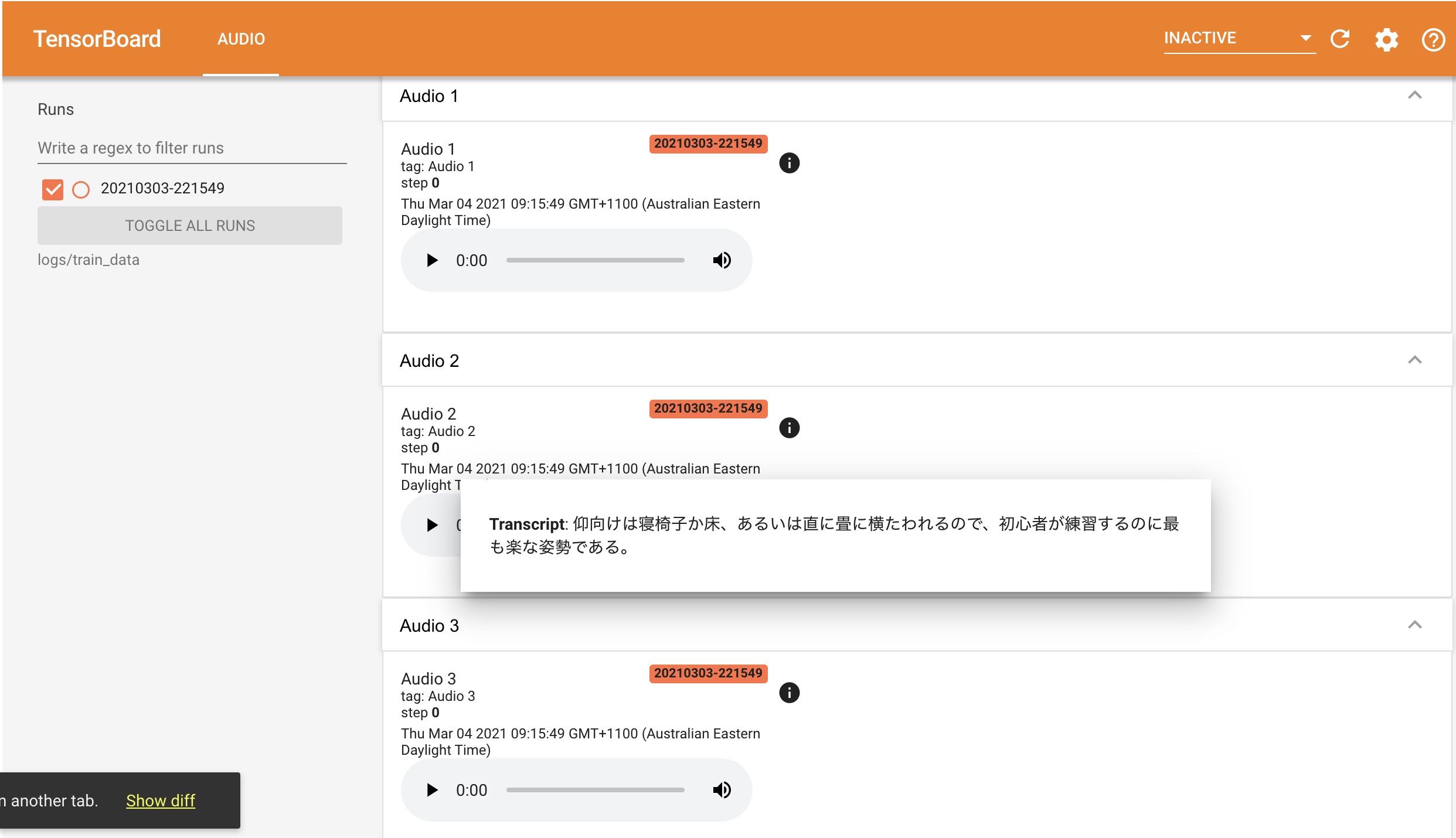

How the audio appears in TensorBoard:

- Clicking the information icon on an audio card shows the description containing the transcript.